The Evolution of AI in ADAS 2.0

One of the most compelling factors driving autonomous vehicles is the reduction of fatalities and accidents. More than 90% of all car accidents are attributed to human error. Self-driving cars play a crucial role in realizing the industry vision of “zero accidents.” Beyond the high power consumption typically associated with the high-performance AI computing required to achieve “just” Level 3 ADAS (power rises considerably for L4 and L5), the fully self-driving car must address some additional, really hard challenges: it needs to be able to learn, see, think, and then act. And it must do so flawlessly across a wide range of weather, road, lighting, and traffic conditions.

For the vehicle to “see” (perception) and ultimately understand its surroundings, it employs a mix of sensors that includes cameras, radar, and LIDAR (light detection and ranging). While controversy exists in the industry regarding the need for LIDAR, reduced price points and learnings from many millions of miles of trials are leading to broader industry adoption. Different types of sensors are employed to offset the limitations inherent to any one sensor. For example, cameras don’t see well in the dark, so by combining data from the LIDAR sensor (which works well at night) with the camera data into a composite image, the vehicle can see at night. Unlike cameras and LIDAR, radar is largely unaffected by fog. When radar is combined with the other sensors, the vehicle can “see” in a wider range of driving conditions. Combining data from the different sensors is known as sensor fusion.

The AI processing performance required for sensor fusion is relatively modest when compared to the AI performance required for perception. The Convolutional Neural Network (CNN), which is typically employed in sensor fusion, is a form of deep learning neural network commonly used in computer vision. While the AI processing required for some aspects of sensor fusion may be lightweight, other aspects of sensor fusion are quite complex.

To illustrate that point, there are vehicles on the road today that employ 11 cameras, 1 long-range radar, and 1 LIDAR, in addition to 12 ultrasonic sensors. It’s important to note that this collection of sensors and sensor types is employed just to achieve Level 2+ ADAS. Successfully creating a composite image by fusing this large number of disparate sensors is a very challenging task. Not only does the aggregate data rate of all these sensors present significant dataflow and data processing challenges, but the cameras typically have different resolutions and frame rates and operate asynchronously. These challenges are further compounded when fusing the LIDAR and radar data with the camera images.
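One common way to cope with sensors that run asynchronously at different rates is to pair samples by nearest timestamp before fusing them. The following is a minimal sketch of that idea, not a description of any production system: the function names, the `max_skew` parameter, and the sample timestamps (a roughly 30 fps camera and a 10 Hz LIDAR) are all assumptions made for illustration.

```python
from bisect import bisect_left


def nearest_sample(timestamps, t):
    """Return the index of the timestamp closest to t.

    Assumes timestamps is non-empty and sorted in ascending order.
    """
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Pick whichever neighbour is closer to t.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align(reference, other, max_skew):
    """Pair each reference timestamp with the closest sample from another sensor.

    Pairs whose time difference exceeds max_skew are dropped rather than fused.
    """
    pairs = []
    for t in reference:
        j = nearest_sample(other, t)
        if abs(other[j] - t) <= max_skew:
            pairs.append((t, other[j]))
    return pairs


# Hypothetical timestamps in milliseconds.
camera_ms = [0, 33, 67, 100, 133, 167, 200]
lidar_ms = [5, 105, 205]

pairs = align(lidar_ms, camera_ms, max_skew=10)
print(pairs)  # [(5, 0), (105, 100), (205, 200)]
```

Using the slower sensor as the reference keeps one fused sample per LIDAR sweep; a real system would also have to compensate for vehicle motion between the paired samples.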

Four approaches to sensor fusion are commonly employed to yield a composite image:

- Early fusion

- Mid-level fusion

- Sequential fusion

- Late fusion

Each approach describes the point at which the data from the different sensors is fused within the fusion signal processing chain. Early fusion typically delivers higher precision at the expense of completeness of scene coverage, whereas late fusion offers more coverage of the environment at the expense of precision. Mid-level and sequential fusion offer more balanced trade-offs between coverage and precision. Once fusion has been completed, the composite image is sent to the perception engine, which is responsible for making sense of the fused image.
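The difference between the two ends of that spectrum can be sketched with a toy example. Everything here is an assumption made for illustration: the "detector" is a simple threshold, the per-cell scores are invented, and averaging is only one of many possible ways to combine raw data. Early fusion combines the raw scores and runs a single detector; late fusion runs a detector per sensor and merges the results.

```python
def detect(scores, threshold):
    """Toy detector: return the set of cell indices whose score reaches the threshold."""
    return {i for i, s in enumerate(scores) if s >= threshold}


def early_fusion(camera, radar, threshold):
    """Fuse raw per-cell scores first, then run a single detector on the fused data."""
    fused = [(c + r) / 2 for c, r in zip(camera, radar)]
    return detect(fused, threshold)


def late_fusion(camera, radar, threshold):
    """Run one detector per sensor, then merge the two detection sets."""
    return detect(camera, threshold) | detect(radar, threshold)


# Hypothetical per-cell confidence scores from two sensors over four grid cells.
camera = [0.9, 0.2, 0.7, 0.1]
radar = [0.8, 0.9, 0.1, 0.1]

early = early_fusion(camera, radar, threshold=0.6)
late = late_fusion(camera, radar, threshold=0.6)
print(sorted(early))  # [0]
print(sorted(late))   # [0, 1, 2]
```

In this toy setup, early fusion reports only the cell both sensors agree on, while late fusion reports every cell that either sensor flags, which loosely mirrors the precision-versus-coverage trade-off described above.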

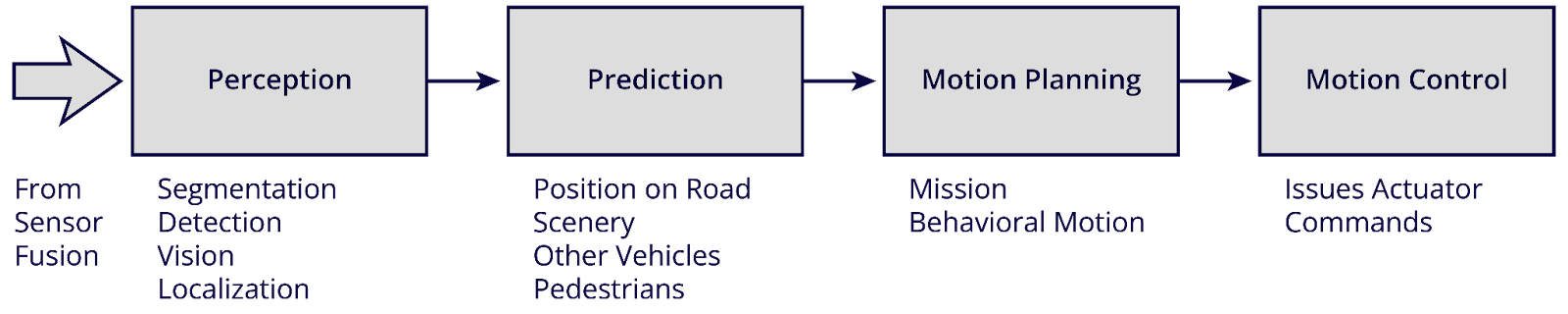

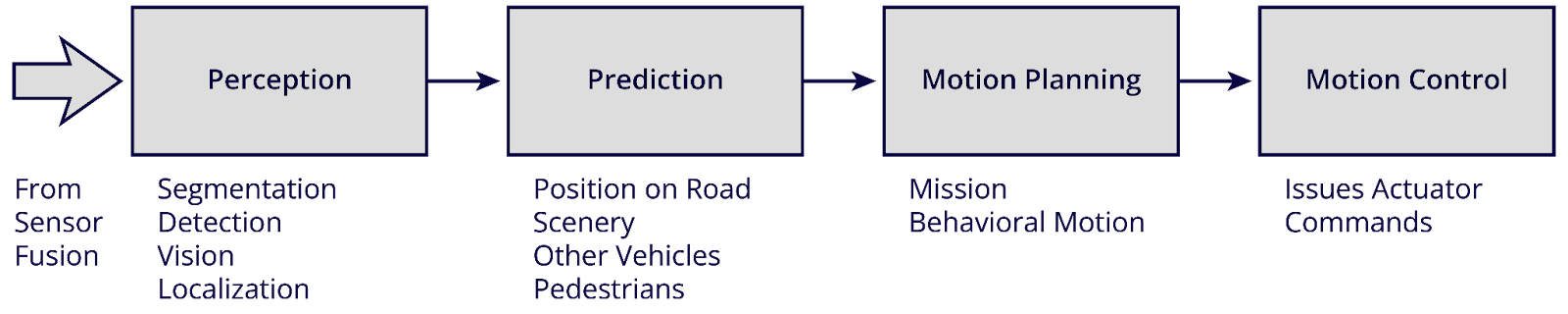

As shown, the autonomy workload consists of four major tasks: perception, prediction, planning, and control. Level 4 autonomy, where a vehicle can operate in self-driving mode with minimal human interaction, requires autonomous driving tasks (e.g., scene understanding, motion detection, and image inference) to be executed continuously with significant consideration for functional safety. While the above signal chain shows a linear signal flow from left to right, functional safety considerations drive the need for redundant signal paths, adding further complexity to both the overall signal chain architecture and the underlying computations.
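The four-stage chain and the idea of a redundant path can be sketched as plain functions. This is a deliberately simplified illustration under stated assumptions: the `Obstacle` type, the constant-closing-speed prediction, the thresholds, and the "brake when the two paths disagree" policy are all hypothetical and are not taken from any particular vehicle or safety standard.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    distance_m: float         # distance ahead of the vehicle
    closing_speed_mps: float  # positive when the gap is shrinking


def perceive(fused_frame):
    """Perception: turn a fused frame (here, a list of dicts) into obstacles."""
    return [Obstacle(o["distance_m"], o["closing_speed_mps"]) for o in fused_frame]


def predict(obstacles, horizon_s):
    """Prediction: estimate each gap after horizon_s, assuming constant closing speed."""
    return [o.distance_m - o.closing_speed_mps * horizon_s for o in obstacles]


def plan(predicted_gaps_m, safe_gap_m):
    """Planning: brake if any predicted gap falls below the safe gap."""
    return "brake" if any(g < safe_gap_m for g in predicted_gaps_m) else "cruise"


def control(decision):
    """Control: map the planner's decision to an actuator command."""
    commands = {
        "brake": {"throttle": 0.0, "brake": 1.0},
        "cruise": {"throttle": 0.3, "brake": 0.0},
    }
    return commands[decision]


def run_chain(fused_frame, horizon_s=2.0, safe_gap_m=10.0):
    """Run perception, prediction, and planning on one signal path."""
    return plan(predict(perceive(fused_frame), horizon_s), safe_gap_m)


def redundant_decision(frame_a, frame_b):
    """Run two independent paths; fall back to braking when they disagree."""
    a, b = run_chain(frame_a), run_chain(frame_b)
    return a if a == b else "brake"


# Two hypothetical paths that see the same obstacle but disagree on its speed.
path_a = [{"distance_m": 40.0, "closing_speed_mps": 5.0}]
path_b = [{"distance_m": 40.0, "closing_speed_mps": 20.0}]

decision = redundant_decision(path_a, path_b)
command = control(decision)
print(decision, command)
```

Even in this small sketch, the redundant path doubles the perception, prediction, and planning work, which hints at why functional safety adds to the compute budget.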

Except for the motion control block, deep-learning neural networks are employed across the entire ADAS signal chain. Each block has different demands regarding the required AI computing performance and the neural nets that are employed. There is an ever-growing population of neural networks that differ in structure, network connectivity, and target end application while offering different trade-offs in complexity, accuracy, and performance. What they all have in common is that they are loosely modeled on the human brain (hence the name neural networks) and are used to solve a very different class of problems than those traditional CPUs have handled for many generations. This field is rapidly evolving and hit its stride when research led to the development of neural networks that could perform object detection and recognition more accurately than a human. This is why applying AI in autonomous systems is such a logical step.

The Convolutional Neural Network (CNN), which was widely employed in ADAS perception for object detection, was quickly displaced by the advent of region-based CNNs (RCNNs). RCNNs marked a significant improvement in perception performance by focusing primarily on regions of interest within the image rather than the entire image. However, this drove a corresponding increase in AI computational demands. Since then, newer models, including Single Shot MultiBox Detector (SSD) and "You Only Look Once" (YOLO), which deliver even better efficiency and improved accuracy for object detection, have gained industry momentum.

Most recently, vision transformers, which borrow from neural network concepts used in natural language processing (NLP; think Siri), have begun to emerge as a leading neural network for image classification. Vision transformers surpass the performance of Recurrent Neural Networks (RNNs) in accuracy; however, this comes at the expense of extended training times, a topic we haven’t discussed yet. Just like learning how to ride a bike, there is a training phase required before you can ride any distance without training wheels. Networks need to be trained to accurately detect and recognize objects in the vehicle’s surroundings.

As another benefit, vision transformers are also less sensitive to visual occlusion. Here again, this more advanced and accurate neural network comes at a cost: it requires more AI computing performance than earlier networks to achieve similar frames-per-second performance.

Suffice it to say, it’s awe-inspiring to witness the almost breakneck pace at which the automotive industry is embracing state-of-the-art technologies, including neural networks, that in some cases are not quite off the drawing board yet but are being adopted ultimately to improve the safety of automotive transportation.
